System Science - Part 2: Drivers & Latency

There’s More To An Audio Interface Than The I/O.

Modern computers are fantastic recording devices. They can work with more audio and MIDI tracks than we’re ever likely to need. They allow us to manipulate audio in ways the engineers of 30 years ago could only dream of. They let us apply EQ, compression and effects to more channels than would be possible in any analogue studio. Yet it’s important to remember that computers are not built specifically for recording. There are challenges that have to be overcome in order for all this to be possible, and issues arising that were never a problem when we recorded to tape. The biggest of these issues is latency: the delay between a sound being captured and its being heard through our headphones or monitors.

From The Beginning

Let’s consider what happens when we record sound to a computer. A microphone measures pressure changes in the air and outputs an electrical signal with corresponding voltage changes. This is called an ‘analogue’ signal, because the variations in electrical potential are analogous to the pressure fluctuations that make up the sound. A device called an analogue-to-digital converter then measures or ‘samples’ this fluctuating voltage at regular intervals (44,100 times per second, in the case of CD-quality audio) and reports these measurements as a series of numbers.

This sequence of numbers is packaged in the appropriate format and sent over an electrical link to the computer. Recording software running on the computer then writes this data to memory and to disk, processes it, and eventually spits it out again so that it can be turned back into an analogue signal by, you guessed it, a digital-to-analogue converter. Only then, assuming we’re monitoring what we’re recording, do we get to hear it.

This is quite a complex sequence of events, and it suffers from a built-in tension between speed and reliability. In a perfect world, each sample that emerges from the analogue-to-digital converter would be sent to the computer, stored and passed back to the digital-to-analogue converter immediately. In practice, however, this makes the recording system too sensitive to interruptions. Even the slightest delay in sending just one out of the millions of samples in an audio recording would cause a dropout.

To make the system more robust, we don’t record and play back each sample as soon as it arrives. Instead, the computer waits until a few tens or hundreds of samples have been received before starting to process them; and the same happens on the way out. This process is called buffering, and it makes the system more resilient in the face of unexpected interruptions. The buffer acts as a safety net: even if something momentarily breaks up the stream of data coming into the buffer, it’s still capable of outputting the continuous uninterrupted sequence of samples we need.

The larger we make these buffers, the better the system’s ability to deal with the unexpected, and the less of the computer’s processing time is spent making sure the flow of samples is uninterrupted. The down side is that the larger we make these buffers, the longer the whole process takes; and once we get beyond a certain point, the recorded sound emerging from the computer starts audibly to lag behind the source sound we’re recording. In some situations this isn’t a problem, but in many cases, it definitely is! Where musicians are hearing their own and each other’s performances through the recording system, it’s vital that the delay never becomes long enough to be audible.
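The trade-off between buffer size and robustness can be illustrated with a toy simulation. This is a minimal sketch, not a model of any real driver: the function name `count_dropouts`, the idea of a 'tick' (one sample period) and the made-up arrival pattern are all assumptions introduced for illustration. Playback waits until a chosen number of samples has accumulated, then takes one sample per tick; a dropout is counted whenever the buffer is empty at the moment a sample is needed.

```python
def count_dropouts(arrivals, prefill):
    """Count ticks on which playback needed a sample but the buffer was empty.

    arrivals[i] is the number of samples reaching the buffer during tick i
    (a hypothetical input pattern). Playback starts once `prefill` samples
    are waiting, and from then on consumes one sample per tick.
    """
    level = 0          # samples currently waiting in the buffer
    playing = False    # playback has not started yet
    dropouts = 0
    for delivered in arrivals:
        level += delivered
        if not playing and level >= prefill:
            playing = True
        if not playing:
            continue   # still filling the buffer: this wait is the added latency
        if level > 0:
            level -= 1
        else:
            dropouts += 1
    return dropouts


# Steady delivery, a 4-tick interruption, a burst that catches up, steady again.
arrivals = [1] * 10 + [0] * 4 + [2] * 4 + [1] * 10

for prefill in (1, 4, 5):
    print(f"buffer of {prefill}: {count_dropouts(arrivals, prefill)} dropouts")
```

With this particular pattern, a buffer of one sample suffers four dropouts, a buffer of four suffers one, and a buffer of five rides out the interruption completely, at the cost of waiting five ticks before anything is heard.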

When latency creeps above a few milliseconds, it quickly becomes audible and can badly affect performers.

A delay between sound being captured and its being heard again at the other end of the recording system is called latency, and it’s one of the most important issues in computer recording. The time lag between playing a note and hearing the resulting sound through headphones is highly off-putting to musicians if it’s long enough to become audible, so this needs to be kept as low as possible without using up too many of the computer’s processing cycles. There are several different factors that contribute to latency, but the buffer size is usually the most significant, and it’s often the only one that the user has any control over.

Numbers Game

Buffer sizes are usually configured as a number of samples, although a few interfaces instead offer time-based settings in milliseconds. The choices on offer are normally powers of two: a typical audio interface might offer settings of 32, 64, 128, 256, 512, 1024 and 2048 samples. You can calculate the theoretical latency that a particular buffer size setting will give you by doubling this number — to reflect the fact that audio is buffered both on the way in and the way out — and dividing the result by the sampling rate. So, for example, at a standard 44.1kHz sample rate, a buffer size of 32 samples should in theory result in a round-trip latency in seconds of (32 x 2) / 44100, which works out at 1.45 milliseconds.
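The calculation above is easy to reproduce for every setting on a typical interface. The following is a short sketch of that arithmetic only; the function name `round_trip_latency_ms` is made up for illustration, and the figures are theoretical values that ignore every other source of delay.

```python
def round_trip_latency_ms(buffer_size, sample_rate=44100):
    """Theoretical round-trip latency in milliseconds.

    The buffer size is doubled because audio is buffered once on the way in
    and once on the way out, then divided by the sampling rate.
    """
    return (buffer_size * 2) / sample_rate * 1000


for size in (32, 64, 128, 256, 512, 1024, 2048):
    print(f"{size:>5} samples: {round_trip_latency_ms(size):6.2f} ms")
```

At 44.1kHz, the theoretical figures run from 1.45ms at 32 samples to roughly 93ms at 2048 samples.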

A latency this low would be completely imperceptible in practice, but unfortunately, it can’t be realised. For one thing, there are other factors that contribute to latency apart from the buffer size, and some of these are unavoidable (see box). For another, some audio interfaces ‘cheat’ by employing additional hidden buffers that are outside the user’s control. This means that although they might report very low latency figures to the recording software, these figures are not actually being achieved. Most importantly, however, reducing the buffer size forces the computer to devote more of its processing power to managing the audio input and output, and if we go too far, we risk running out of processing resources. One of the key challenges of audio interface design is to ensure that it’s actually possible to use low buffer sizes in practice, and there’s a lot of variation in how well different interfaces meet this challenge.

Misreporting of latency also brings problems of its own, especially when we want to send recorded signals out of the computer to be processed by external hardware. If we want any dry signal mixed in, as might be the case with parallel compression, this will be out of time with the processed signal, resulting in audible phasing and comb filtering. In order to line up the wet and dry signals correctly, the recording software needs to know the exact latency of the recording system.
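To see why even a small misalignment matters, consider the simplest case: a dry signal summed with an identical copy of itself at equal level and the same polarity, delayed by a fixed amount. Under those assumptions (which are a simplification, since a compressed signal is not an identical copy), complete cancellation occurs at odd multiples of half the reciprocal of the delay. The sketch below computes the first few of those frequencies; the function name `notch_frequencies_hz` is hypothetical.

```python
def notch_frequencies_hz(delay_ms, count=3):
    """First `count` cancellation frequencies when a signal is summed with
    an equal-level, same-polarity copy delayed by `delay_ms` milliseconds.

    Nulls fall where the delay equals an odd number of half-cycles:
    f = (2k + 1) / (2 * delay), for k = 0, 1, 2, ...
    """
    return [(2 * k + 1) * 1000 / (2 * delay_ms) for k in range(count)]


print(notch_frequencies_hz(1.0))   # delay of 1 ms
print(notch_frequencies_hz(0.25))  # delay of a quarter of a millisecond
```

A 1ms error puts the first notch at 500Hz, squarely in the midrange, which is why the recording software needs an accurate latency figure rather than an approximate one.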

If you want to integrate studio outboard at mixdown, it’s important that your audio interface correctly reports its latency to the host computer, especially if you want to set up parallel processing.

What Is A Driver?

In order for a meaningful transfer of data to take place between a computer and an attached interface, the computer’s operating system needs to know how to talk to it. This is made possible by software that interposes itself between the hardware and the operating system or recording software, and which includes a low-level program called a ‘driver’. (Technically, the driver is only a small part of the code that enables recording software to communicate with recording hardware. However, it’s common usage to refer to this code collectively as the ‘driver’.)

Computer operating systems usually come with a collection of drivers for commonly used hardware items such as popular printers, as well as generic ‘class’ drivers, which can control any device that is compliant with the rules that define a particular type of device. The USB specification, for instance, defines a class called ‘audio interface’. In theory, a hardware manufacturer could build a USB interface that met this class definition and not have to worry about writing drivers for it — although, as we shall see, there is more to it than this. Where no class driver is available, or where better performance is needed, a driver needs to be specially written and installed.

The driver and related software are critically important to achieving good low-latency performance. Well-written driver code manages the system’s resources more efficiently, allowing the buffer size to be kept low without imposing a heavy load on the computer’s central processing unit. The importance of drivers means it’s not possible to simply say that one type of computer connection is always better than another for attaching audio interfaces. Any technical advantage that, say, Thunderbolt has over USB is only meaningful in practice if the manufacturer can exploit it in their driver code.

Mac Vs Windows

In general, when software needs to communicate with external hardware, it does so through code built into the operating system, which in turn communicates with the driver for that particular device. One reason why Apple computers are popular for music recording is that Mac OS includes a system called Core Audio, which has been designed with this sort of need in mind. Core Audio provides an elegant and reasonably efficient intermediary between recording software and the audio interface driver. It supports essential features like multi-channel operation and does not add significant latency of its own. Mac OS even includes a built-in driver for class-compliant USB audio devices which offers fairly good performance, so many manufacturers of USB interfaces choose to use this rather than writing their own.

Mac OS X includes a sophisticated audio management infrastructure called Core Audio, which was designed partly with multitrack recording in mind.

Historically, this stands in contrast with the audio handling protocols built into Windows, such as MME and DirectSound. These not only add to the latency, but lack features that are vital for music production. So, when Steinberg developed the first native Windows multitrack audio recording software, Cubase VST, they also created a protocol called Audio Stream Input/Output (ASIO). ASIO connects recording software directly to the device driver, bypassing the various layers of code that Windows would otherwise interpose. At the time when ASIO was developed, there was no other way of conveying multiple audio streams to and from an audio interface at the same time. More recent versions of Windows have introduced newer driver models and protocols, but ASIO remains a near-universal standard in professional music software. Note that as it’s not a Microsoft standard, Windows doesn’t include any ASIO drivers at all, so even class-compliant devices must be supplied with an ASIO driver for use with music software that expects to see one.

Writing efficient low-level software such as drivers and ASIO code requires specialist skills and expertise, and once written, that code needs to be maintained to remain compatible with the latest version of each operating system. This is a significant burden on manufacturers of audio interfaces, and many of them choose to license third-party code instead of writing their own. In the case of USB devices under Mac OS, as we’ve seen, this code is already built into the operating system; in other cases, it’s usually developed by the manufacturers of the chipsets, the sets of components on the audio interface that handle communication with the computer.

This has obvious advantages for the manufacturer, but it also creates a chain of dependence which can cause problems. For example, most FireWire audio interfaces used a chipset designed by TC Applied Technologies, and licensed driver code from the same manufacturer. Eventually, this code became highly optimised and offered very good low-latency performance; but it took many years to reach this point, and in the meantime, there was little manufacturers reliant on that code could do to improve things.

It might not be obvious whether your audio interface uses a custom driver or a generic one, because the driver code operates at a low level and the user does not interact with it directly. Almost all recording interfaces come with a separate program, sometimes called a ‘control panel’, to provide user control over the various features of the interface. These control panel programs are invariably written by the audio interface manufacturers, so the fact that two interfaces each have a unique control panel utility does not mean that they don’t share the same generic driver code.

Avoiding Latency

How much latency is acceptable? There’s no simple answer to this question. Sound travels about one foot per millisecond, so in theory, a latency of 10ms shouldn’t feel any worse than moving 10 feet away from the sound source — and guitarists on stage are often further than 10 feet from their amps. Nevertheless, many players complain that even this amount of latency is detectable; and there are situations where much smaller amounts of latency are audible. In any situation where a player or singer is hearing both the direct sound and the recorded sound, for example, any latency at all will cause comb filtering between the two.
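The 'one foot per millisecond' rule of thumb can be checked with a couple of lines of arithmetic. This sketch assumes a speed of sound of 343 metres per second, a commonly quoted figure for air at around room temperature; the function name `equivalent_distance_feet` is made up for illustration.

```python
SPEED_OF_SOUND_M_PER_S = 343.0   # assumed value for air at about 20 degrees C
METRES_PER_FOOT = 0.3048


def equivalent_distance_feet(latency_ms, speed=SPEED_OF_SOUND_M_PER_S):
    """Distance sound travels through air in `latency_ms` milliseconds."""
    metres = speed * latency_ms / 1000
    return metres / METRES_PER_FOOT


for ms in (1, 5, 10, 20):
    print(f"{ms:>2} ms is about {equivalent_distance_feet(ms):4.1f} feet")
```

On that assumption, 10ms corresponds to a little over 11 feet, so the rule of thumb is slightly conservative but close enough for practical purposes.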

Some say that for a guitarist, a 10ms latency should feel no different from standing ten feet from his or her amp. Not everyone agrees!

When we’re using a MIDI controller to play a soft synth, the audio that’s generated inside the computer has only to pass through the output buffer, not the input buffer. In theory, this should mean the contribution of audio buffering to latency is halved, but in practice, the process of getting MIDI data into the computer also adds latency to the system. The amount of data involved is tiny compared with audio, but it still has to be generated at the source instrument, transmitted to the computer (usually, these days, over USB) and fed to the virtual instrument that is making the noise. All of these steps take a finite amount of time, and there is also the potential for ‘jitter’, whereby the latency is not constant but varies by a few milliseconds. MIDI latency is unlikely to be noticeable if you’re playing string pads from a keyboard, but it can be an issue where you’re triggering drum samples from a MIDI kit. Any system that employs pitch-to-MIDI detection, such as a MIDI guitar, is also prone to noticeable latency on low notes, as it needs to ‘see’ an entire waveform cycle in order to detect the pitch.
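The pitch-to-MIDI problem comes down to the length of one waveform cycle, which is simply the reciprocal of the frequency. The sketch below works this out for a few notes, assuming equal temperament with A4 tuned to 440Hz and standard MIDI note numbering; the function names are hypothetical, and real trackers may need more or less than exactly one cycle.

```python
def note_frequency_hz(midi_note, a4_hz=440.0):
    """Equal-tempered frequency of a MIDI note number (A4 = note 69)."""
    return a4_hz * 2 ** ((midi_note - 69) / 12)


def one_cycle_ms(midi_note):
    """Duration of a single waveform cycle, in milliseconds."""
    return 1000 / note_frequency_hz(midi_note)


for name, note in (("bass low E (E1)", 28),
                   ("guitar low E (E2)", 40),
                   ("guitar high E (E4)", 64)):
    print(f"{name:<20} {note_frequency_hz(note):7.2f} Hz, "
          f"one cycle = {one_cycle_ms(note):5.2f} ms")
```

A guitar’s low E string takes about 12ms to complete a single cycle, so a detector that waits for one full cycle has already used up more time than many players will tolerate before any buffering is added.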

When we use a MIDI device to trigger audio in a software instrument, that audio only has to pass through the output buffer, so experiences only half of the usual system latency. However, the process of getting MIDI into the instrument in the first place can easily take just as long.

Avoiding The Issue

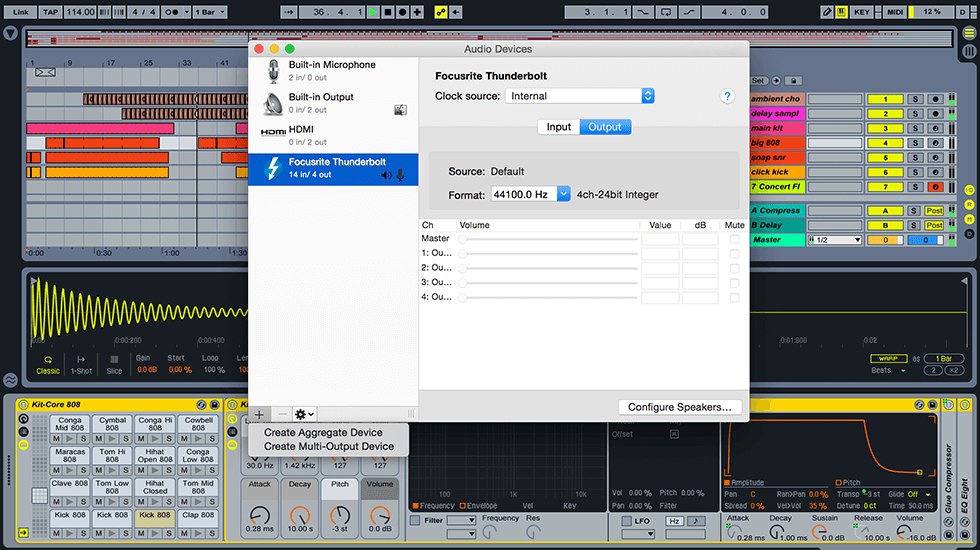

No digital recording system can be entirely free of latency. The only way to avoid latency altogether is to create a monitor path in the analogue domain, so that the signal being heard is auditioned before it reaches the A-D converter. This is common practice in large studios, where an analogue mixing console is often used as a ‘front end’ for a computer-based recording system. There are also small-format analogue mixers designed for the project studio that incorporate built-in audio interfaces. With this sort of setup, the mixer’s own faders and aux sends can then be used to generate cue mixes for the musicians which do not pass through the recording system at all, and thus are heard without any latency.

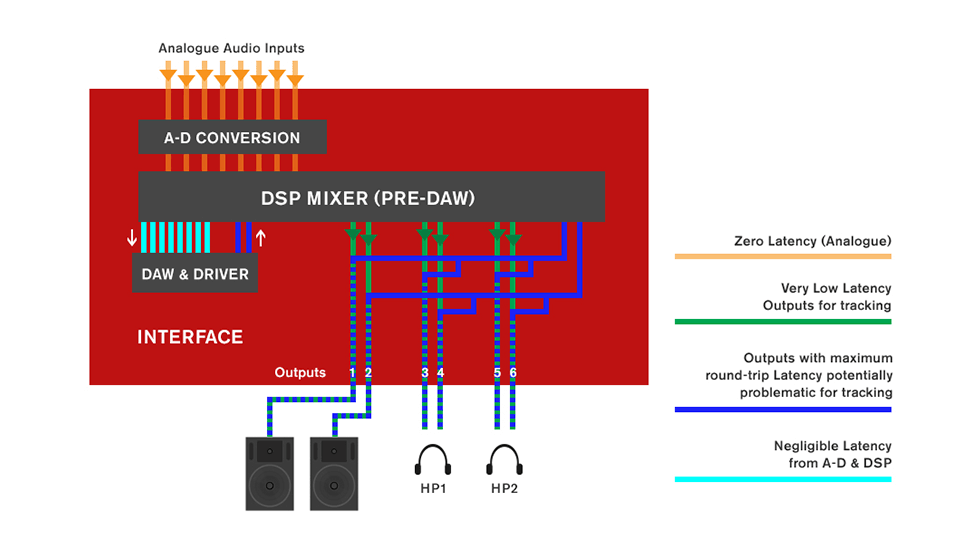

A block diagram showing input signals routed through an external mixer to set up a zero-latency monitoring path.

This type of arrangement has a lot to recommend it when you’re recording bands live. It makes it easy and quick to set up multiple different monitor mixes that can be routed to separate headphone amps, with no latency issues at all. However, not everyone has the space or budget for an analogue mixer and associated cables, patchbays and so forth. Routing signals through an analogue console can also affect sound quality, especially if it’s a budget model, and many people prefer the cleaner and simpler signal path you get by plugging mics and instruments directly into the audio interface.

Using an analogue mixer with a digital recording system makes it easy to set up zero-latency cue mixes for performers. It also helps keep the control room warm in winter!

With this in mind, most manufacturers build cue-mixing capabilities directly into their audio interfaces, recreating the same functionality but in the digital domain. Setting up these built-in digital mixers is usually the main function of the control panel utilities described earlier. This has the advantages of being much cheaper to implement, requiring no additional space or cabling, and not degrading the sound that’s being recorded. On the down side, although this approach reduces latency to levels that are usually imperceptible, it doesn’t eliminate it completely: the signal still passes through the A-D and D-A converters before it’s heard, and in a few cases, the digital cue mixer itself can introduce latency.

Block diagram showing input signals routed through a digital mixer within the interface to set up a low-latency monitoring path.

No Way Out

For the last fifteen years or so, almost all audio interfaces designed for multitrack recording have incorporated a digital mixer to handle low-latency input monitoring, as described above. However, the fact that it’s a widely used way of managing latency doesn’t mean that it’s the best way, and there are several problems with this approach. The first issue is that it adds to the complexity of the recording system. Rather than working entirely within a single recording program with its own mixer, the user is forced to constantly switch back and forth between recording software and the interface’s control panel utility. As a result, sessions take longer to set up, troubleshooting is more difficult, and there’s no way to use the cue mixes configured in the audio interface mixer as a starting point for final mixes in the recording software.

Tracks in your recording software have to be muted during recording, to avoid hearing the same signal twice, but unmuted when you want to play them back, and not all DAW software allows this to be done automatically. Moreover, many digital cue mixers and control panel utilities are poorly designed, inconsistent or difficult to use.

Attempts have been made to tackle this problem by allowing the recording software’s mixer window to control the low-latency mixer in the interface. Steinberg’s ASIO Direct Monitoring is probably the most widely supported of these, but it is far from being a universal standard, and other solutions require the user to choose both hardware and software from the same manufacturer. None of these, however, addresses the remaining issues with this approach to avoiding latency.

One of these is that in any setup where a separate mixer is being used to avoid latency, the signal is being monitored before it completes its journey into and through the recording system. This means that if any problem occurs further along in the recording chain, we won’t hear it until it’s too late. For instance, if we are monitoring input signals through an analogue console and the level is too hot for the audio interface it’s attached to, the recorded signal will be audibly and unpleasantly distorted even though what the artist hears in his or her headphones sounds fine.

If you’re not monitoring exactly what’s being recorded, you leave open the potential for things to go wrong in ways that can only be discovered when it’s too late.

Perhaps the biggest limitation with the workaround of using a mixer, though, is that it only works when the sound is being created entirely independently of the computer. An all-analogue monitoring path might be the best way for a singer to hear his or her own performance, but it’s of no use when we want to play a soft synth, or record electric guitar through a software amp simulator. It’s also no use when we want to give the singer a ‘larger than life’ version of his or her vocal sound through the use of plug-in effects. The only way to ensure that those sounds emerge promptly when we press a key or twang a string is to make the system latency as low as possible.

High Sample Rate, Low Latency?

As we’ve seen, the buffer size is usually set in samples. However, the duration of a sample depends on the sampling rate. So, if you’re recording at 88.2kHz, twice as many samples are measured and processed each second compared with standard 44.1kHz recording. In theory, then, doubling the sample rate should halve the system latency if you don’t change the buffer size, and this is sometimes recommended as a means of lowering latency.
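The effect of sample rate on a fixed buffer size can be put into numbers. This is a sketch of the arithmetic only, with hypothetical function names: it shows how long one buffer lasts at each rate, and how many buffers the computer has to service every second as a result.

```python
def buffer_duration_ms(buffer_size, sample_rate):
    """Time represented by one buffer, in milliseconds."""
    return buffer_size / sample_rate * 1000


def buffers_per_second(buffer_size, sample_rate):
    """How many buffers must be filled and emptied each second."""
    return sample_rate / buffer_size


BUFFER_SIZE = 128  # samples; an arbitrary setting chosen for illustration

for rate in (44100, 88200):
    one_way = buffer_duration_ms(BUFFER_SIZE, rate)
    print(f"{rate} Hz: {one_way:.2f} ms per buffer, "
          f"{2 * one_way:.2f} ms theoretical round trip, "
          f"{buffers_per_second(BUFFER_SIZE, rate):.1f} buffers per second")
```

At 128 samples, the theoretical round trip falls from about 5.8ms to about 2.9ms when the rate is doubled, but the computer must also deliver a complete buffer twice as often.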

Increasing sample rate can help lower latency in some circumstances, but it’s not a magic bullet.

In the real world, however, this is of limited use. The problem with most audio interfaces is not that low buffer settings aren’t available: it’s that they don’t perform as advertised, or that inefficient driver code maxes out the computer’s CPU resources at these settings. Doubling the sample rate also considerably increases the load on the computer’s resources, as well as generating twice as much data, so if a particular buffer size works for you at 44.1kHz, there’s no guarantee it will still work at 88.2 or 96kHz. And in any case, we may want to choose a different sample rate for other reasons: most audio for video, for example, needs to be at 48kHz.

Ultimately, the only solution to the problem of latency that isn’t an undesirable compromise is to reduce it to the point where it’s no longer noticeable. This has been achieved in the live sound world, where major gigs and tours are invariably now run from digital consoles. The key to achieving unnoticeably low levels of latency in the studio is to choose the right audio interface: not only one that sounds good and has the features you need, but which will be capable of running at low buffer sizes without overwhelming your studio computer.

Modern computers are the most powerful recording devices that have ever existed. But if we can’t hear what we’re recording in real time, without cumbersome workarounds, we are not getting the full benefits of that power. We might even be going backwards compared with the tape-based, analogue studios of forty years ago.

Further Reading: Measuring Latency

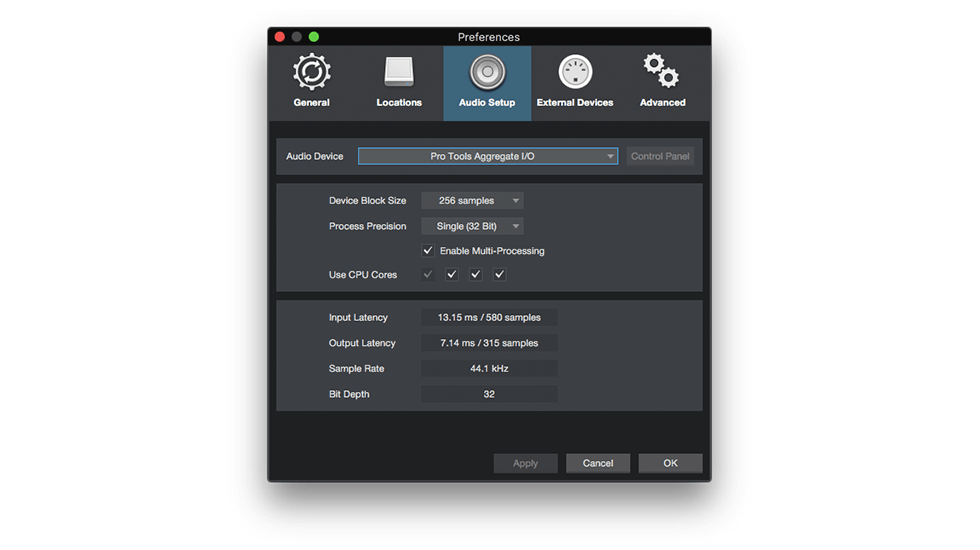

Audio interfaces are supposed to report their latency to recording software, and you’ll usually find a readout of this reported value in a menu somewhere. However, it’s important not to take this value as gospel. Some interfaces do report the true latency, but many under-report the actual value.

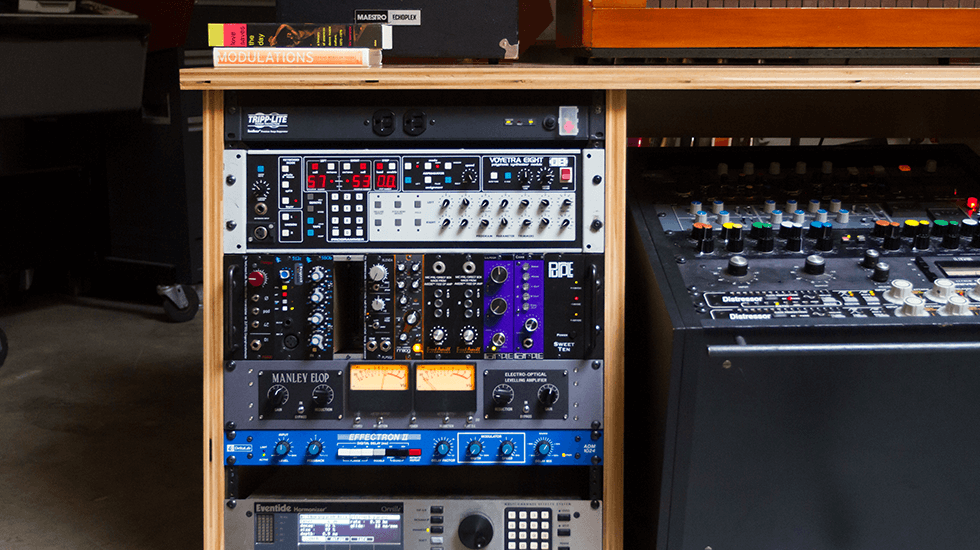

When it comes to latency, you can’t always believe what your audio interface is telling your recording software.

There are various ways of obtaining a reliable measurement of system latency. The very best of these is to use an entirely separate recording system. Load up an audio file that contains easily identifiable transients (a click track is perfect) and feed this to two outputs on the measurement system. Connect one of these directly back to an input on the measurement system, and route the second through the system under test. When these two inputs are re-recorded, the latency will be visible as a time difference between them. This is the best way to be certain that all the possible factors contributing to system latency are taken into account.

If you don’t have a separate recording system handy, you can measure the round-trip latency by hooking up an output of your interface directly to an input (it’s a good idea to mute your monitors in case this creates a feedback loop). Again, you’ll need an audio file containing easily identified transients. Place this on a track in your DAW, route it to the output that is looped, and record the input it’s looped to onto an adjacent track. When you zoom in very closely, you’ll be able to see if the original and the re-recorded clicks line up. If they do, the latency that your DAW reports is accurate. If the re-recorded click is behind the original, then the true latency is equal to the reported latency plus the difference.

However, the latency alone isn’t the whole story. On a given computer, two interfaces might both achieve the same round-trip latency, but in doing so, one of them might leave you far more CPU resources available than the other. It is hard to find a completely objective way of measuring this trade-off between latency and CPU load, but by far the most thorough attempt is DAWBench. Created by Vin Curigliano, this assigns audio interfaces a score based on their performance on a fixed test system, evaluating not only the actual latency at different buffer sizes but also the amount of CPU resources available.
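The loopback comparison described above, in which you zoom in and compare click positions by eye, can also be done numerically once both tracks have been exported as sample data. The sketch below is one simple way to do it, assuming each track is a plain list of sample values between -1 and 1 and that the click is the first thing to cross a chosen threshold. The function names, the threshold and the synthetic test data are all made up for illustration.

```python
def first_onset(samples, threshold=0.5):
    """Index of the first sample whose magnitude reaches the threshold."""
    for index, value in enumerate(samples):
        if abs(value) >= threshold:
            return index
    raise ValueError("no transient found above the threshold")


def offset_ms(original, rerecorded, sample_rate=44100, threshold=0.5):
    """Time by which the re-recorded click trails the original, in ms."""
    lag = first_onset(rerecorded, threshold) - first_onset(original, threshold)
    return lag / sample_rate * 1000


def true_latency_ms(reported_ms, measured_offset_ms):
    """Reported latency plus whatever offset remains after compensation."""
    return reported_ms + measured_offset_ms


# Synthetic example: the re-recorded click arrives 88 samples late.
original = [0.0] * 100 + [1.0] + [0.0] * 400
rerecorded = [0.0] * 188 + [0.9] + [0.0] * 312

residual = offset_ms(original, rerecorded)
print(f"residual offset: {residual:.2f} ms")
print(f"true latency if 5.8 ms was reported: {true_latency_ms(5.8, residual):.2f} ms")
```

A threshold crossing is the crudest possible transient detector; with noisy or band-limited recordings, a cross-correlation between the two tracks would be a more robust choice.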

Further Reading: Other Causes Of Latency

As mentioned in the main text, buffer size is usually the most significant cause of latency, and it’s often the one that is most easily controlled by the user. However, it’s not the only factor that contributes to the latency of a computer-based recording system.

Some of these other factors are inevitable. The process of ‘sampling’ an incoming analogue signal and converting it to a stream of digital data takes time, and so does the digital-to-analogue conversion at the other end of the signal chain. These delays caused by sampling are very small (well under 1ms) and make little difference to the overall latency, but there are circumstances when they are relevant, particularly when you have two or more different sets of converters attached to the same interface. This is the case when, for instance, you connect a multi-channel preamp with an ADAT output to an interface that has its own preamps and converters. In this situation, converter latency can mean the two sets of signals are fractionally out of sync: not enough to be a problem if they are carrying different signals, but conceivably a problem if, for instance, a stereo recording were to be split between the two.

A less well-known fact is that recording software itself adds a small amount of latency. Focusrite’s measurements have shown that there is some variability here, with Pro Tools and Reaper being the most efficient of the major DAW programs, and Ableton Live introducing more latency than most. Again, though, the total extra latency is very small, and typically well under 2ms.

What’s better known is that audio processing plug-ins can introduce latency. Indeed, there is a common belief that they all do, but this is only true in products that use a hardware co-processor to handle plug-ins, such as the Universal Audio UAD2 and Pro Tools HDX systems. The vast majority of ‘native’ plug-ins — that is, plug-ins which run on the host computer — introduce no additional latency at all, because they only need to process individual samples as they arrive.

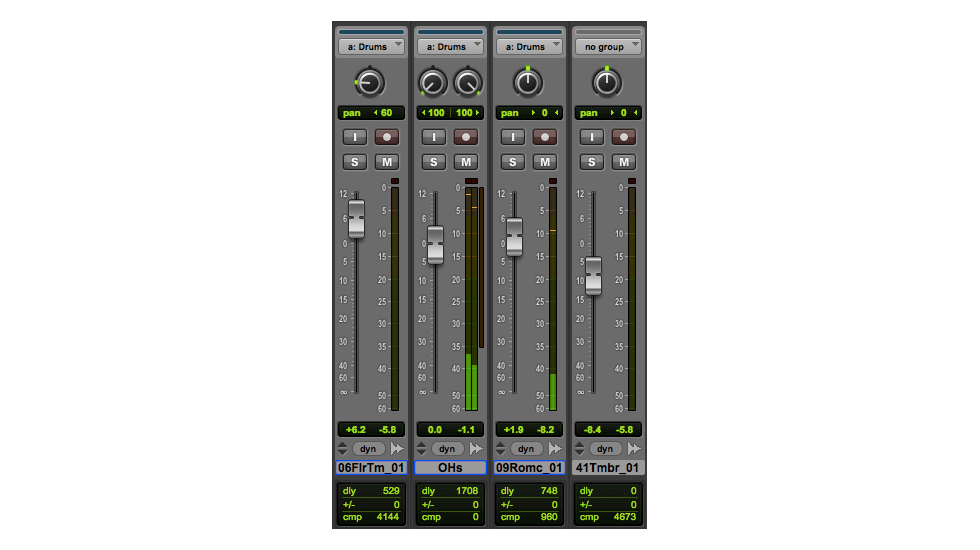

Some recording software, such as Pro Tools, reports any delay introduced by plug-ins to the user.

Processing plug-ins that add latency to the system typically fall into two groups: convolution plug-ins, including ‘linear phase’ equalisers, and dynamics plug-ins that need to use ‘lookahead’. In both cases, the plug-in depends on being able to inspect not just one sample at a time, but a whole series of samples. In order to do this, audio needs to be buffered into and out of the plug-in, adding further delay — and since most recording software applies ‘delay compensation’ to keep everything in sync, this delay is propagated to every track.
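The way delay compensation spreads one plug-in’s latency across a whole session can be shown with a simplified model. This sketch assumes a set of parallel tracks feeding a single mix bus, each with a known total plug-in latency in samples; the function name `compensation_delays` and the example figures are hypothetical, and real hosts handle sends, groups and sidechains in more elaborate ways.

```python
def compensation_delays(track_latencies):
    """Extra delay, in samples, that each track needs so all tracks line up.

    Every track is padded to match the slowest one, so the whole mix ends up
    as late as the track with the largest plug-in latency.
    """
    longest = max(track_latencies.values())
    return {name: longest - latency for name, latency in track_latencies.items()}


# Hypothetical session: only the drum track uses a high-latency plug-in.
session = {"vocal": 0, "bass": 64, "drums": 2048}

for name, extra in compensation_delays(session).items():
    print(f"{name:<6} delayed by {extra:>4} extra samples")
```

In this example a single 2048-sample plug-in on one track means the vocal is held back by 2048 samples too, which at 44.1kHz is more than 46ms of added monitoring delay.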

In general, it is therefore good practice not to introduce any plug-ins that cause delays until the mixing stage is reached, although not all recording programs make it easy to find out whether a particular plug-in adds extra latency. Some convolution plug-ins offer a ‘zero latency’ mode: this doesn’t actually eliminate the latency, but deliberately misreports it as zero to the host program, so that delay compensation doesn’t get applied. Finally, although the digital mixers built into many audio interfaces typically operate at zero latency, there are a handful of (non-Focusrite) products where this isn’t the case — so it can turn out that a feature intended to compensate for latency actually makes it worse!

Words: Sam Pryor